Opened 7 years ago

Closed 5 years ago

#1689 closed defect (fixed)

Writing connections leak with http/2

| Reported by: | Nar | Owned by: | |

|---|---|---|---|

| Priority: | major | Milestone: | |

| Component: | nginx-core | Version: | 1.15.x |

| Keywords: | http/2, http2 | Cc: | |

| uname -a: | Linux CentOS-72-64-minimal 3.10.0-514.2.2.el7.x86_64 #1 SMP Tue Dec 6 23:06:41 UTC 2016 x86_64 x86_64 x86_64 GNU/Linux | ||

| nginx -V: |

nginx version: nginx/1.15.7

built by gcc 4.8.5 20150623 (Red Hat 4.8.5-28) (GCC) built with OpenSSL 1.0.2k-fips 26 Jan 2017 TLS SNI support enabled configure arguments: --prefix=/etc/nginx --sbin-path=/usr/sbin/nginx --modules-p ath=/usr/lib64/nginx/modules --conf-path=/etc/nginx/nginx.conf --error-log-path= /var/log/nginx/error.log --http-log-path=/var/log/nginx/access.log --pid-path=/v ar/run/nginx.pid --lock-path=/var/run/nginx.lock --http-client-body-temp-path=/v ar/cache/nginx/client_temp --http-proxy-temp-path=/var/cache/nginx/proxy_temp -- http-fastcgi-temp-path=/var/cache/nginx/fastcgi_temp --http-uwsgi-temp-path=/var /cache/nginx/uwsgi_temp --http-scgi-temp-path=/var/cache/nginx/scgi_temp --user= nginx --group=nginx --with-compat --with-file-aio --with-threads --with-http_add ition_module --with-http_auth_request_module --with-http_dav_module --with-http_ flv_module --with-http_gunzip_module --with-http_gzip_static_module --with-http_ mp4_module --with-http_random_index_module --with-http_realip_module --with-http _secure_link_module --with-http_slice_module --with-http_ssl_module --with-http_ stub_status_module --with-http_sub_module --with-http_v2_module --with-mail --wi th-mail_ssl_module --with-stream --with-stream_realip_module --with-stream_ssl_m odule --with-stream_ssl_preread_module --with-cc-opt='-O2 -g -pipe -Wall -Wp,-D_ FORTIFY_SOURCE=2 -fexceptions -fstack-protector-strong --param=ssp-buffer-size=4 -grecord-gcc-switches -m64 -mtune=generic -fPIC' --with-ld-opt='-Wl,-z,relro -W l,-z,now -pie' |

||

Description

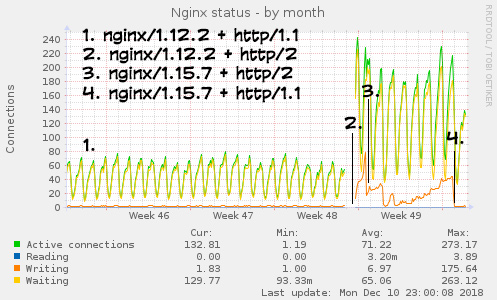

Writing connections counter is gradually grow up as shown on munin graph after switch to http/2 with nginx 1.15.7 and clear only after service nginx restart.

I think that #1650 and #1610 is not my case, becouse these thikets do not associated with switching to http/2 — after disabling http/2 in my case everything is OK. I do not have ModSecurity as in #1650, do not have stream {} in config file as noted in ticket:1610#comment:1, max_size for proxy_cache_path in not configured as in #1163, but in logs I got some open socket ... left in connection ... alerts on reload.

Attachments (5)

Change History (28)

comment:1 by , 7 years ago

comment:3 by , 6 years ago

I see the same problem on a bunch of my servers. I managed to catch those events while running nginx with debug log, but the log is huge, about 6G. I don't think I'll be able to attach it here even gzipped. Is there anything I can filter out to make it smaller?

comment:4 by , 6 years ago

First of all, you may start with filtering only logs from a particular worker process. For example, if you see a message like this:

2019/11/20 19:03:42 [alert] 11121#100115: *2 open socket #3 left in connection 4

You may grep for 11121# - it identifies particular nginx worker process. Also, *2 identifies the connection within the particular nginx instance, so you can grep for 11121#100115: *2 , to find out all connection-specific log lines, for example:

$ grep -F ' 11121#100115: *2 ' error.log 2019/11/20 19:03:11 [debug] 11121#100115: *2 accept: 127.0.0.1:41524 fd:3 2019/11/20 19:03:11 [debug] 11121#100115: *2 event timer add: 3: 60000:56144712 ... 2019/11/20 19:03:16 [debug] 11121#100115: *2 http process request header line 2019/11/20 19:03:42 [alert] 11121#100115: *2 open socket #3 left in connection 4

Note though that it is unlikely that only connection-specific log lines will be enough to debug the problem, but they can be used to provide a time frame for further filtering - you can only keep log lines relevant to the time frame of a particular connection.

Please also provide nginx -V and nginx -T output.

by , 6 years ago

| Attachment: | nginx.debug.opensocket.26930.0.250.log.gz added |

|---|

comment:5 by , 6 years ago

There you go:

nginx version: nginx/1.16.1

built by gcc 4.4.7 20120313 (Red Hat 4.4.7-17) (GCC)

built with OpenSSL 1.0.2p 14 Aug 2018

TLS SNI support enabled

configure arguments: --prefix=/etc/nginx --sbin-path=/usr/sbin/nginx --modules-path=/usr/lib64/nginx/modules --conf-path=/etc/nginx/nginx.conf --error-log-path=/var/log/nginx/error.log --http-log-path=/var/log/nginx/access.log --pid-path=/var/run/nginx.pid --lock-path=/var/run/nginx.lock --http-client-body-temp-path=/var/cache/nginx/client_temp --http-proxy-temp-path=/var/cache/nginx/proxy_temp --http-fastcgi-temp-path=/var/cache/nginx/fastcgi_temp --http-uwsgi-temp-path=/var/cache/nginx/uwsgi_temp --http-scgi-temp-path=/var/cache/nginx/scgi_temp --user=nginx --group=nginx --with-file-aio --with-ipv6 --with-http_auth_request_module --with-http_ssl_module --with-http_v2_module --with-http_realip_module --with-http_addition_module --with-http_xslt_module=dynamic --with-http_image_filter_module=dynamic --with-http_geoip_module=dynamic --with-http_sub_module --with-http_dav_module --with-http_flv_module --with-http_mp4_module --with-http_gunzip_module --with-http_gzip_static_module --with-http_random_index_module --with-http_secure_link_module --with-http_degradation_module --with-http_slice_module --with-http_stub_status_module --with-http_perl_module=dynamic --with-mail=dynamic --with-mail_ssl_module --with-pcre --with-pcre-jit --with-stream=dynamic --with-stream_ssl_module --with-openssl=openssl-1.0.2p --with-openssl-opt=shared --add-dynamic-module=ngx_http_geoip2_module-2.0 --with-debug --with-cc-opt='-O2 -g -pipe -Wall -Wp,-D_FORTIFY_SOURCE=2 -fexceptions -fstack-protector --param=ssp-buffer-size=4 -m64 -mtune=generic -fPIC' --with-ld-opt=' -Wl,-E'

by , 6 years ago

| Attachment: | nginx.conf.opensocket added |

|---|

comment:6 by , 6 years ago

The configuration and debug log suggests you are using a 3rd party module. Are you able to reproduce the problem without any 3rd party modules?

comment:7 by , 6 years ago

Well, the thing is I won't get any traffic without that module. Since this ticket initially was filed on nginx without third party modules I assumed the module is not the culprit. Also, when I disable http2 in configuration (leaving the module and the rest exactly the same) I don't get any indication of the problem described here.

comment:8 by , 6 years ago

I assumed the module is not the culprit

While there can be a bug in nginx itself, it is more likely to be a problem in a 3rd party module used. Further, even if the bug is in nginx itself, presence of 3rd party modules might greatly complicate debugging. That's why we always ask to reproduce the problem without any 3rd party modules.

On the other hand, looking again into the debug log I think I understand what goes on here, and I was able to reproduce this with vanilla nginx with appropriate configuration. Thanks for the debug log.

comment:10 by , 6 years ago

Please try the following patch:

# HG changeset patch

# User Maxim Dounin <mdounin@mdounin.ru>

# Date 1575380920 -10800

# Tue Dec 03 16:48:40 2019 +0300

# Node ID f0a901125b2183514c8b1944c5a52832847cfce6

# Parent d13eddd9e2529b4bc30dc00aad959bd10ced4c33

HTTP/2: fixed socket leak with queued frames.

If a stream is closed with queued frames, it is possible that no further

write events will occur on the stream, leading to the socket leak.

To fix this, the stream's fake connection read handler is set to

ngx_http_v2_close_stream_handler(), to make sure that finalizing the

connection with ngx_http_v2_finalize_connection() will be able to

close the stream regardless of the current number of queued frames.

Additionally, the stream's fake connection fc->error flag is explicitly

set, so ngx_http_v2_handle_stream() will post a write event when queued

frames are finally sent even if stream flow control window is exhausted.

diff --git a/src/http/v2/ngx_http_v2.c b/src/http/v2/ngx_http_v2.c

--- a/src/http/v2/ngx_http_v2.c

+++ b/src/http/v2/ngx_http_v2.c

@@ -4288,8 +4288,9 @@ ngx_http_v2_close_stream(ngx_http_v2_str

fc = stream->request->connection;

if (stream->queued) {

+ fc->error = 1;

fc->write->handler = ngx_http_v2_close_stream_handler;

- fc->read->handler = ngx_http_empty_handler;

+ fc->read->handler = ngx_http_v2_close_stream_handler;

return;

}

comment:11 by , 6 years ago

I rebuilt nginx with the patch, but I still see those errors. I'll attach a new debug log.

comment:12 by , 6 years ago

The new debug log

2019/12/04 18:31:14 [debug] 4802#0: *16280 event timer del: 227: 74035521684 2019/12/04 18:31:14 [debug] 4802#0: *16280 event timer add: 227: 180000:74035580465 2019/12/04 18:31:37 [alert] 4802#0: *16280 open socket #227 left in connection 50

suggests that the "open socket ... left" alert is bogus, and probably is a result of the TERM signal sent to nginx (after a graceful shutdown of the worker process was initiated). Please provide full debug log around 2019/12/04 18:31:37 (this particular second should be enough, though full time period from 18:31:14 to 18:31:37 might be needed if the above assumption is wrong).

follow-up: 15 comment:13 by , 6 years ago

File is too large to fit here. I uploaded it to https://gofile.io/?c=uMKeuQ

comment:14 by , 6 years ago

Also I realized that in my comment above I might have found 'open socket' messages, which appeared after I stopped nginx. So, this one appeared when I ran nginx reload (I'll add another log file).

by , 6 years ago

| Attachment: | nginx.debug.opensocket.5361.0.32556.log.gz added |

|---|

comment:15 by , 6 years ago

Replying to dadmin-admin@…:

File is too large to fit here. I uploaded it to https://gofile.io/?c=uMKeuQ

As previously suggested, these "open socket ... left" alerts are caused by the TERM signal sent to nginx which was already in the process of graceful shutdown:

2019/12/04 18:31:37 [notice] 4782#0: signal 15 (SIGTERM) received from 6081, exiting

That is, these are bogus and safe to ignore.

Attachment nginx.debug.opensocket.5361.0.32556.log.gz added

In this log, the only connection uses HTTP/1.1:

2019/12/04 18:30:21 [debug] 5361#0: *32556 http request line: "GET /h/20181205/1544011353_328982_550544.iph.mp4/seg-147-v1-a1.ts?st=8ReoOobZJNFp5JqW0GAmvA&e=1575455308&a=0&mask=0 HTTP/1.1"

It is clearly unrelated to the HTTP/2 issue in question. Further, last lines look like:

2019/12/04 18:30:22 [debug] 5361#0: *32556 ngx_async_file_read: reading offset 0 size 4096 2019/12/04 18:30:22 [debug] 5361#0: *32556 aio complete:0 @0:4096 /filedata/flv/20181205/1544011353_328982_550544.iph.mp4 2019/12/04 18:30:22 [debug] 5361#0: *32556 ngx_http_vod_handler: done 2019/12/04 18:30:22 [debug] 5361#0: *32556 http finalize request: -4, "/hfi/20181205/1544011353_328982_550544.iph.mp4/seg-147-v1-a1.ts?" a:1, c:3 2019/12/04 18:30:22 [debug] 5361#0: *32556 http request count:3 blk:1 2019/12/04 18:30:22 [debug] 5361#0: *32556 http finalize request: -4, "/hfi/20181205/1544011353_328982_550544.iph.mp4/seg-147-v1-a1.ts?" a:1, c:2 2019/12/04 18:30:22 [debug] 5361#0: *32556 http request count:2 blk:1 2019/12/04 18:30:22 [alert] 5361#0: *32556 open socket #296 left in connection 103

This suggests that the alert is caused by the fact that the module you are using issues an AIO operation without any timers set, and this in turn makes nginx think that shutting down is safe and the connection is leaked - while it is actually not. This is a known problem with AIO (in vanilla nginx it can manifest itself, for example, when using proxy_cache), which usually do not manifest itself because most AIO operations have at least some timers armed, and because AIO operations in nginx are usually fast. Anyway, as explained, this is not a socket leak and not related to this particular ticket.

Please try looking at writing connections counters, as described in the ticket. It should be more or less obvious from the numbers if the patch fixes the issue or not.

comment:16 by , 6 years ago

Yep, I keep watching connection counters. Usually it takes a few days to build up, but so far it looks good.

comment:17 by , 6 years ago

It's been almost a week we are running nginx with this patch and I don't see any connections leak anymore. I believe it has been fixed. Thank you very much!

comment:18 by , 6 years ago

Thanks for testing. The patch is currently under an internal review and will be committed soon.

comment:19 by , 6 years ago

| Resolution: | → fixed |

|---|---|

| Status: | new → closed |

Committed, 82c1339e2637. Thanks to everyone involved.

follow-up: 22 comment:21 by , 6 years ago

| Resolution: | fixed |

|---|---|

| Status: | closed → reopened |

I had this problem, and upgraded nginx to v1.18 (and now to v1.19). Thought it was fixed, although didn't have a way to check it. Noticed today that it's still happening:

$ curl -s http://127.0.0.1/nginx_status

Active connections: 7

server accepts handled requests

481789 481789 484749

Reading: 0 Writing: 7 Waiting: 0

$ sudo service nginx reload

$ sudo tail /var/log/nginx/error.log

2020/07/03 17:07:56 [alert] 7750#7750: *34077 open socket #8 left in connection 2

2020/07/03 17:07:56 [alert] 7750#7750: *253675 open socket #9 left in connection 3

2020/07/03 17:07:56 [alert] 7750#7750: *251368 open socket #4 left in connection 5

2020/07/03 17:07:56 [alert] 7750#7750: *303251 open socket #5 left in connection 6

2020/07/03 17:07:56 [alert] 7750#7750: *438166 open socket #15 left in connection 7

2020/07/03 17:07:56 [alert] 7750#7750: *432925 open socket #14 left in connection 9

2020/07/03 17:07:56 [alert] 7750#7750: aborting

$ curl http://127.0.0.1/nginx_status

Active connections: 7

server accepts handled requests

481853 481853 484813

Reading: 0 Writing: 7 Waiting: 0

$ sudo service nginx restart

$ curl http://127.0.0.1/nginx_status

Active connections: 1

server accepts handled requests

1 1 1

Reading: 0 Writing: 1 Waiting: 0

$ nginx -V

nginx version: nginx/1.19.0

built by gcc 7.4.0 (Ubuntu 7.4.0-1ubuntu1~18.04.1)

built with OpenSSL 1.1.1 11 Sep 2018

TLS SNI support enabled

configure arguments: --prefix=/etc/nginx --sbin-path=/usr/sbin/nginx --modules-path=/usr/lib/nginx/modules --conf-path=/etc/nginx/nginx.conf --error-log-path=/var/log/nginx/error.log --http-log-path=/var/log/nginx/access.log --pid-path=/var/run/nginx.pid --lock-path=/var/run/nginx.lock --http-client-body-temp-path=/var/cache/nginx/client_temp --http-proxy-temp-path=/var/cache/nginx/proxy_temp --http-fastcgi-temp-path=/var/cache/nginx/fastcgi_temp --http-uwsgi-temp-path=/var/cache/nginx/uwsgi_temp --http-scgi-temp-path=/var/cache/nginx/scgi_temp --user=nginx --group=nginx --with-compat --with-file-aio --with-threads --with-http_addition_module --with-http_auth_request_module --with-http_dav_module --with-http_flv_module --with-http_gunzip_module --with-http_gzip_static_module --with-http_mp4_module --with-http_random_index_module --with-http_realip_module --with-http_secure_link_module --with-http_slice_module --with-http_ssl_module --with-http_stub_status_module --with-http_sub_module --with-http_v2_module --with-mail --with-mail_ssl_module --with-stream --with-stream_realip_module --with-stream_ssl_module --with-stream_ssl_preread_module --with-cc-opt='-g -O2 -fdebug-prefix-map=/data/builder/debuild/nginx-1.19.0/debian/debuild-base/nginx-1.19.0=. -fstack-protector-strong -Wformat -Werror=format-security -Wp,-D_FORTIFY_SOURCE=2 -fPIC' --with-ld-opt='-Wl,-Bsymbolic-functions -Wl,-z,relro -Wl,-z,now -Wl,--as-needed -pie'

$ uname -a

Linux nginx-host.company.com 5.3.0-1019-aws #21~18.04.1-Ubuntu SMP Mon May 11 12:33:03 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux

$ apt policy nginx | head -n 7

nginx:

Installed: 1.19.0-1~bionic

Candidate: 1.19.0-1~bionic

Version table:

*** 1.19.0-1~bionic 500

500 http://nginx.org/packages/mainline/ubuntu bionic/nginx amd64 Packages

100 /var/lib/dpkg/status

comment:22 by , 6 years ago

Replying to PenelopeFudd@…:

I had this problem, and upgraded nginx to v1.18 (and now to v1.19). Thought it was fixed, although didn't have a way to check it. Noticed today that it's still happening:

The leak reported in this ticket is certainly fixed, at least the one with the debug log provided. There may be other leaks though. If you are seeing a leak and can reproduce it, please provide full configuration and debug log, see comment:1.

Ok, so you observe

open socket ... left in connection ...alerts on reload, and likely this indicate real socket leak (unless you've forced termination of a particular worker process with an additional signal after a reload, which may also result in the same messages).First of all, please make sure you are not using any 3rd party modules. If there are any, please make sure you are able to reproduce the problem without them.

Further, please provide the following information to further debug what is going on:

nginx -T.